I have dipped my toes in compute shaders in the past, but by not working on shaders for a long period, I slowly

forgot exactly how they work. I wanted to re-learn the basics, so that I can use them for more complex projects in the future.

I believe one of the best way to learn and retain information is to either explain it, or to demo it. This is a mixture of both.

Important Information

This is meant for someone who doesn't know much about compute shaders and GPGPU. As I have little experience

with the topic, some of the code might not be perfectly optimized or best practice. Some information (or my understanding of it) might also

not be completely accurate, as I am far from an expert as of writing. For this reason I included links to more information

throughout the page. This is more of a project for me to (re)learn the basics of GPGPU and most importantly, have fun with it!

You will need basic familiarity with Unity to follow this project, as I focus on the algorithms rather than the setup in Unity editor.

Before we begin with the simulation lets first display two bodies, so we can see whats going on. We'll choose quads, because they are one of the simplest mesh(two triangles) and they are therefore very easy to draw for our gpu. We will simply instantiate them in our start method for now.

using System.Collections.Generic;

using UnityEngine;

public class NBodySimulation : MonoBehaviour

{

List<Transform> quadBodies;

// Start is called before the first frame update

void Start()

{

quadBodies = new List<Transform>();

//instantiate bodies

var newBody = GameObject.CreatePrimitive(PrimitiveType.Quad);

newBody.transform.parent = this.transform;

newBody.transform.localScale = Vector3.one * 0.5f;

newBody.transform.position = Vector3.right;

quadBodies.Add(newBody.transform);

newBody = GameObject.CreatePrimitive(PrimitiveType.Quad);

newBody.transform.parent = this.transform;

newBody.transform.localScale = Vector3.one * 0.5f;

newBody.transform.position = Vector3.left;

quadBodies.Add(newBody.transform);

}

}

We can now start with the simulation.

We will use both Newton's law of universal gravitation

and the equations of motion.

The equations we will need are:

\(

F_g=G\frac{m_1 m_2}{r^2},\hspace{1em} G = 6.67430 × 10 ^{-11} m^3 kg^{-1} s^{-2}

\)

\(

\vec{F}=m\vec{a}

\)

\(

x=x_0+v_0t+ \frac{1}{2}at^2

\)

\(

v=v_0+at

\)

We can now assign mass, acceleration, velocity, and position variables to each of our body to implement these equations.

using UnityEngine;

class Body

{

public Vector3 position { get; private set; }

Vector3 velocity;

Vector3 acceleration;

float mass;

public Body(Vector3 position, Vector3 velocity, float mass)

{

this.position = position;

this.velocity = velocity;

this.acceleration = Vector3.zero;

this.mass = mass;

}

}

We can finally implement the above equations in the code. We will approach the problem this way: First we will calculate the total force applied from all other bodies to this body. We will then use this force and the mass to calculate the acceleration. Using the acceleration, we will update the position and velocity of the body. We can separate these three step in two functions:

class Body

{

public void KinematicsUpdate(ICollection<Body> bodies, float timeElapsed)

{

UpdateAcceleration(bodies);

UpdatePosition(timeElapsed);

}

private void UpdateAcceleration(ICollection<Body> bodies)

{

Vector3 totalForce = Vector3.zero;

foreach (var b in bodies)

{

if (b != this)//we dont compute gravity on self, this will result in division by zero

{

//calculate magnitude and direction of force

Vector3 directionVector = b.position - this.position;

float distance = directionVector.magnitude;

totalForce += 6.67430e-11f * b.mass * this.mass * directionVector.normalized / (distance * distance);

}

}

acceleration = totalForce / mass;

}

private void UpdatePosition(float timeElapsed)

{

position += timeElapsed * velocity + 0.5f * timeElapsed * timeElapsed * acceleration;

velocity += timeElapsed * acceleration;

}

}

Coming back to the simulation, we only need to match these bodies with the quads we created earlier, and call the kinematics update for each body in Unity's update method. Let's modify our start method: We will start with two bodies of mass 1e10, 2 meters apart and see what happens.

public class NBodySimulation : MonoBehaviour

{

List<Transform> quadBodies;

List<Body> bodies;

// Start is called before the first frame update

void Start()

{

quadBodies = new List<Transform>();

bodies = new List<Body>();

//instantiate bodies

var newBody = GameObject.CreatePrimitive(PrimitiveType.Quad);

newBody.transform.parent = this.transform;

newBody.transform.localScale = Vector3.one * 0.5f;

quadBodies.Add(newBody.transform);

newBody = GameObject.CreatePrimitive(PrimitiveType.Quad);

newBody.transform.parent = this.transform;

newBody.transform.localScale = Vector3.one * 0.5f;

quadBodies.Add(newBody.transform);

bodies.Add(new Body(Vector3.left, Vector3.zero, 1e10f));

bodies.Add(new Body(Vector3.right, Vector3.zero, 1e10f));

}

private void Update()

{

for (int i = 0; i < bodies.Count; i++)

{

bodies[i].KinematicsUpdate(bodies, Time.deltaTime);

quadBodies[i].transform.position = bodies[i].position;

}

}

}

We can see that the simulation looks good at the begining, but one of the bodies shoots off at the end. This is because as the bodies get closer,

the distance squared gets really small which create a giant force in the division of the UpdateAcceleration() method.

Lets fix that with a condition to ignore bodies that are too close: (ln 17)

class Body

{

float distanceThreshold = 0.1f;

private void UpdateAcceleration(ICollection<Body> bodies)

{

Vector3 totalForce = Vector3.zero;

foreach (var b in bodies)

{

if (b != this)//we dont compute gravity on self, this will result in division by zero

{

//calculate magnitude and direction of force

Vector3 directionVector = b.position - this.position;

float distance = directionVector.magnitude;

if (distance < distanceThreshold) continue;

totalForce += 6.67430e-11f * b.mass * this.mass * directionVector.normalized / (distance * distance);

}

}

acceleration = totalForce / mass;

}

}

We can also try to modify the initial velocity and mass of our bodies for a more interesting simulation.

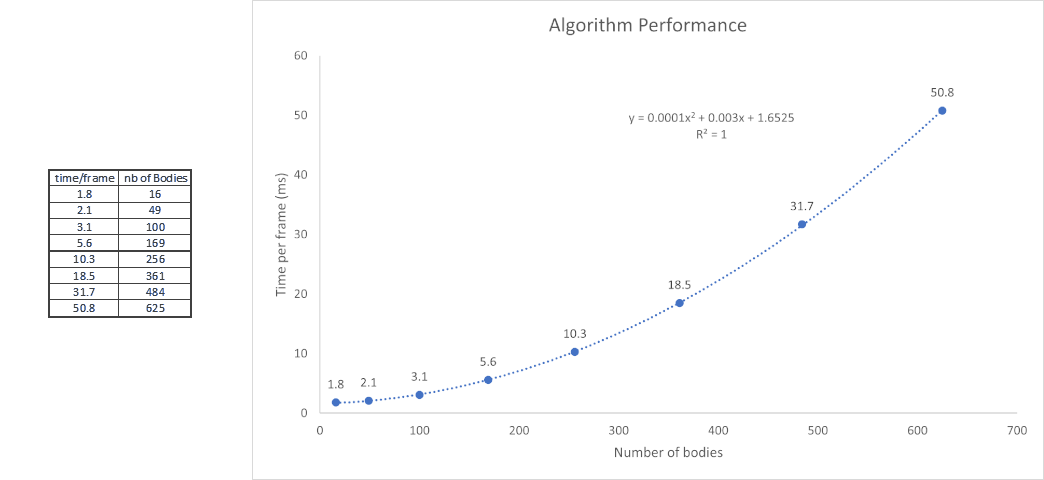

Now that our code works as expected, lets try to upscale this and see how many bodies we can simulate.

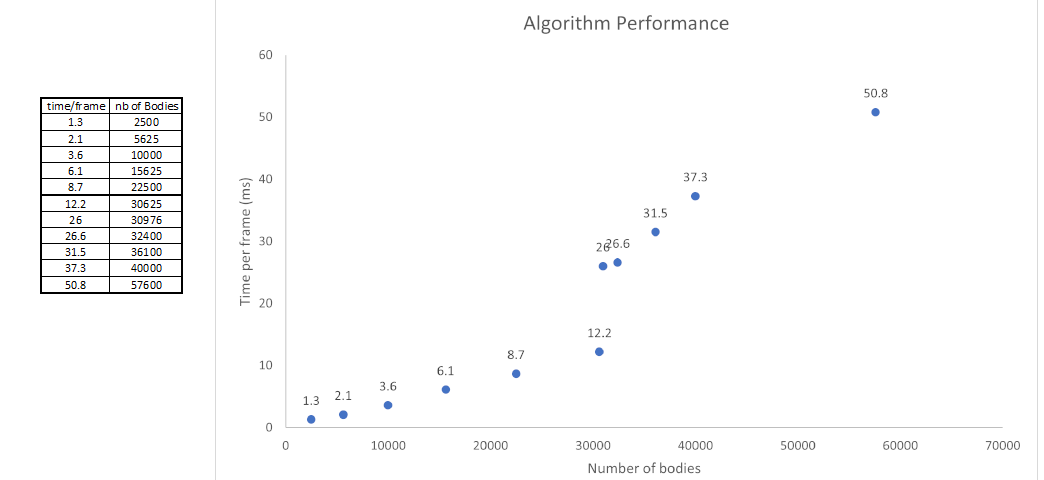

We can use the number of bodies simulated and the time it takes to calculate a frame as a measure of performance.

This measurement will vary depending of your hardware, but we can use it as long as we always compare simulation

numbers from the same machine.

That's pretty neat! However, with 625 bodies in a 25*25 grid, the fps dropped to around 20 (50ms/frame). Of course, we can do better.

Lets examine how we could improve the code.

There are some small optimization we can do do our force calculation:

Notice that in the force calculation equation, our masses are always multiplied by constant G.

\(

F_g=G\frac{m_1 m_2}{r^2}

\)

We can scale our simulation and by removing this multiplication and adjusting the mass of bodies in our start method.

Although we save an operation, multiplication in modern processors is extremely fast and this will have almost no impact

on the simulation performance. Our algorithm runs in quadratic time

O(n2),

since we iterate through n bodies for every nth body. The biggest problem in our case is therefore the number of bodies.

We can also notice that we have duplicate data. We have the body's position in the Body class, but we also have it for each quad. This will be addressed in the next section.

Here is the final code:

using System.Collections.Generic;

using UnityEngine;

class Body

{

public Vector3 position { get; private set; }

Vector3 velocity;

Vector3 acceleration;

float mass;

float distanceThreshold = 0.1f;

public Body(Vector3 position, Vector3 velocity, float mass)

{

this.position = position;

this.velocity = velocity;

this.acceleration = Vector3.zero;

this.mass = mass;

}

public void KinematicsUpdate(ICollection<Body> bodies, float timeElapsed)

{

UpdateAcceleration(bodies);

UpdatePosition(timeElapsed);

}

private void UpdateAcceleration(ICollection<Body> bodies)

{

Vector3 totalForce = Vector3.zero;

foreach (var b in bodies)

{

if (b != this)//we dont compute gravity on self, this will result in division by zero

{

//calculate magnitude and direction of force

Vector3 directionVector = b.position - this.position;

float distance = directionVector.magnitude;

if (distance < distanceThreshold) continue;

totalForce += b.mass * this.mass * directionVector.normalized / (distance * distance);

}

}

acceleration = totalForce / mass;

}

private void UpdatePosition(float timeElapsed)

{

position += timeElapsed * velocity + 0.5f * timeElapsed * timeElapsed * acceleration;

velocity += timeElapsed * acceleration;

}

}

using System.Collections.Generic;

using UnityEngine;

public class NBodySimulation : MonoBehaviour

{

List<Transform> quadBodies;

List<Body> bodies;

int gridsSize = 25;

// Start is called before the first frame update

void Start()

{

quadBodies = new List<Transform>();

bodies = new List<Body>();

for (int i = 0; i < gridsSize; i++)

{

for (int j = 0; j < gridsSize; j++)

{

//instantiate bodies

var newBody = GameObject.CreatePrimitive(PrimitiveType.Quad);

newBody.transform.parent = this.transform;

newBody.transform.localScale = Vector3.one * 0.5f;

quadBodies.Add(newBody.transform);

Vector3 position = new Vector3(-gridsSize / 2 + i, -gridsSize / 2 + j) * 1.5f;

bodies.Add(new Body(position, (Random.insideUnitSphere + Vector3.Cross(position, Vector3.back).normalized), 1e-1f));

}

}

}

private void Update()

{

for (int i = 0; i < bodies.Count; i++)

{

bodies[i].KinematicsUpdate(bodies, Time.deltaTime);

quadBodies[i].transform.position = bodies[i].position;

}

}

}

One of the main problems with our O(n2) algorithm is that we do the whole calculation for each

body sequentially, even though a body does not depend on other's computation to do its own. To fix this,

we can use parallel processing to compute many bodies simultaneously.

A way to do this is to use hardware that is excellent at this type of parallel computations: the Graphics Processing Unit

(GPU).

GPUs are used most often for image processing and rendering 3D objects (such as our quads from the last section) using

shaders. However

we can compute arbitrary information instead of graphics with

compute shaders.

This is known as GPGPU

(General-purpose computing on graphics processing units) and has many applications.

Similarly to the last section, we will start by being able to see the bodies before tackling the simulation. In the last section,

we let Unity take care of creating and rendering the quads for us. However we know that the GPU is used for rendering the 3D objects,

and that all of our simulation will run on the GPU. If we use the same type of visualization as in part 1, we will have to get the bodies'

position data from the GPU to update our GameObjects, only for it to be sent to the GPU again for rendering. Each data transfer can take some

time and this is clearly inneficient. For this reason, we will take care of rendering ourselves, so that the data stays in the GPU's memory.

Since we want to share the data between our compute shader and our rendering shader, we will use Unity's

Graphics.DrawProcedural

method.

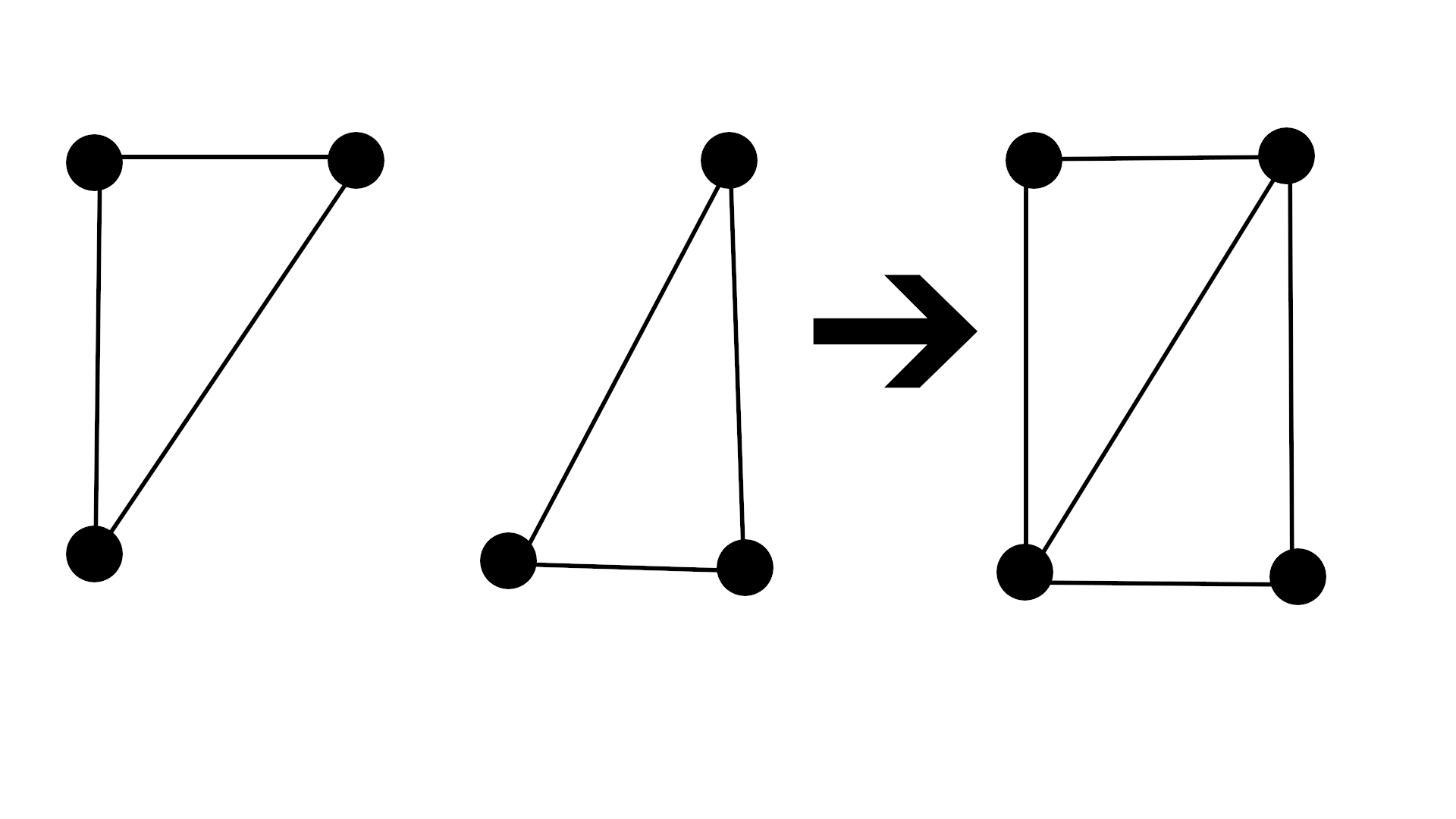

First we need a

Mesh.

Our mesh will be composed of 2 triangles, so 6 vertices in total.

Here is the code for the rendering shader. I have left comments in the code so it's easier to follow,

however I will not explain the whole thing here. There are a lot of resources online for shader basics.

This is a simple unlit shader, with a rotation transform so the quads always face the camera. Since we have access to the simulation data,

we can modify the color of each body according to their acceleration and their mass, which will make the simulation look a bit nicer.

Shader "Unlit/ProceduralBody"

{

Properties

{

_Color("Main Color", Color) = (1,1,1,1)

}

SubShader

{

Tags { "Queue" = "Geometry" "RenderType" = "Opaque"}

Pass

{

CGPROGRAM

#pragma target 5.0

#pragma vertex vert

#pragma fragment frag

#include "UnityCG.cginc"

struct Body

{

float3 pos;//position

float3 vel;//velocity

float3 acc;//acceleration

float mass;

};

struct v2f

{

uint id : SV_INSTANCEID;

float4 vertex : SV_POSITION;

};

float3 faceCamera(float3 position, float3 centerPosition)

{

//get direction to face

float3 norm = normalize(_WorldSpaceCameraPos - centerPosition);

//get axis to rotate on

//axis to rotate on is orthogonal to original facing direction and new direction to face

float3 axis = normalize(cross(norm, float3(0, 0, 1)));

//since both vector are normalized, no need to divide by magnitude

// a . b = |A|*|B|*cos(angle)

float angle = acos(dot(norm, float3(0, 0, -1)));

//rotate position of vertex

//https://en.wikipedia.org/wiki/Rotation_matrix#Rotation_matrix_from_axis_and_angle

float3 rotatedPos = axis * (dot(axis, position)) +

cross(cos(angle) * (cross(axis, position)), axis) +

sin(angle) * (cross(axis, position));

return rotatedPos;

}

float4 _Color;

StructuredBuffer<float3> quadVertices;

StructuredBuffer<Body> bodies;

//vertex_id-> id of vertex. We have six vertex so this will range from 0 to 5.

//instance_id-> id of instance. The number of instances is the number of bodies we have. This will range from 0 to numberOfBodies-1.

v2f vert(uint vertex_id: SV_VertexID, uint instance_id : SV_InstanceID)

{

v2f o;

//get vertex position

float3 position = quadVertices[vertex_id];

//make quad face camera

position = faceCamera(position, bodies[instance_id].pos);

//add body position

position += bodies[instance_id].pos;

//convert the vertex position from world space to clip space

o.vertex = UnityObjectToClipPos(float4(position.xyz, 1));

o.id = instance_id;

return o;

}

fixed4 frag(v2f i) : SV_Target

{

//modify color depending on acceleration and mass

fixed accCol = length(bodies[i.id].acc) * 100;

fixed massCol = bodies[i.id].mass / 15000000;

return _Color + fixed4(0, massCol * 0.5f + accCol * 0.5f, accCol, 1);

}

ENDCG

}

}

}

We will also need a C# struct to match the shader struct.

using UnityEngine;

public struct Body

{

public Vector3 pos;

public Vector3 vel;

public Vector3 acc;

public float mass;

}

We can then start our simulation setup code. We first start by creating our mesh vertices. We then create a ComputeBuffer,

which is how we store data in the GPU's memory(vram). It is basicaly an array, with two parameters:

count: the number of elements in the array.

stride: the size of an element in the array.

Since our vertices are floating point 3D vectors, the stride is 3 time the size of a float.

We use the

Material.SetBuffer

method to set our shader variable "quadVertices" to this buffer. Also, notice the OnDestroy() method. Since we allocated memory in the vram,

we need to release it when we are done with it. If we don't, Unity will give us a warning.

using UnityEngine;

public class NBodySimulationGPU : MonoBehaviour

{

[SerializeField] Material bodyMat;

[SerializeField] int numberOfBodies = 1;

ComputeBuffer quadVerticesBuffer;

const int vertexCount = 6;

readonly Bounds renderBounds = new Bounds(Vector3.zero, Vector3.one * 5000);//for frustrum culling

// Start is called before the first frame update

void Start()

{

SetupRenderShader();

}

void SetupRenderShader()

{

//Create our mesh vertices

Vector3[] quadVertices = new Vector3[]

{

new Vector3(-0.5f, 0.5f),

new Vector3(0.5f, 0.5f),

new Vector3(0.5f, -0.5f),

new Vector3(0.5f, -0.5f),

new Vector3(-0.5f, -0.5f),

new Vector3(-0.5f, 0.5f)

};

//set our vertices in the vram (GPU memory)

quadVerticesBuffer = new ComputeBuffer(quadVertices.Length, 3 * sizeof(float));

quadVerticesBuffer.SetData(quadVertices);

bodyMat.SetBuffer("quadVertices", quadVerticesBuffer);

}

// Update is called once per frame

void Update()

{

Graphics.DrawProcedural(bodyMat, renderBounds, MeshTopology.Triangles, vertexCount, numberOfBodies);

}

private void OnDestroy()

{

quadVerticesBuffer?.Release();

}

}

To have it work properly, create a new material and set it to use the shader above. Then, in the editor, set

the simulation's serialized field to the material just created.

Here is the result:

Now that we can render our bodies, lets write the simulation code. First we need to declare which function is

our main function, so we need to add

#pragma kernel functionName

We also need to declare

our Body struct to be able to use it in the shader. We then need our body array which is of type RWStructuredBuffer<Body>.

Above our main function, we need to define the number of threads to be run per group. This is has 3 dimensions, x, y, z and the

product of the 3 dimensions has to be less or equal to 1024. So why did we put (1024, 1, 1) ? Because this is also linked to how we will identify each body.

Notice that the parameter of our main function is uint3 id. This id will match the way we define numthreads. For example if we were using

data in 2 dimensions, such as an image, we would need two dimensions to identify a pixel (x, y). We would then declare numthreads in two

dimensions, with values for both x and y, and 1 for z. Similarly, with a 3d array, we would use all three dimensions. Since our array is one

dimensional, we only need the x component to index our array.

But what if we want to run more than 1024 bodies? Well this 1024 is the number of threads per group, but we can run multiple groups. This will become

clearer in the simulation code when we execute the compute shader, but we will run the minimum number of group to iterate through the whole array.

For example, if we have 2048 bodies, we would launch 2 groups, but if we have 2049, we would need to launch 3 groups.

Now that it's a bit clearer, we can write the main code. We know that we have an id which corresponds to an index in our array, so this will perform the same task

as the for loop in our update method from the last section. We only need to code the equivalent of KinematicsUpdate() that we had in Body, and it should work.

// Each #kernel tells which function to compile; you can have many kernels

#pragma kernel NBodyMain

struct Body

{

float3 pos; //position

float3 vel; //velocity

float3 acc; //acceleration

float mass;

};

RWStructuredBuffer<Body> bodies;

float DELTATIME; //time increment between simulation frames

float distanceThreshold;

int numBodies; //length of bodies array

[numthreads(1024, 1, 1)]

void NBodyMain(uint3 id : SV_DispatchThreadID)

{

int index = id.x;

float3 totalForce = float3(0, 0, 0);

for (int i; i < numBodies; i++)

{

if (i != index)//we dont compute gravity on self, this will result in division by zero

{

//calculate magnitude and direction of force

float3 directionVector = bodies[i].pos - bodies[index].pos;

float distance = length(directionVector);

if (distance < distanceThreshold)

continue;

totalForce += bodies[i].mass * bodies[index].mass * normalize(directionVector) / (distance * distance);

}

}

bodies[index].acc = totalForce / bodies[index].mass;

bodies[index].pos += DELTATIME * bodies[index].vel + 0.5f * DELTATIME * DELTATIME * bodies[index].acc;

bodies[index].vel += DELTATIME * bodies[index].acc;

}

Lets now go back to the simulation setup. Lets create the bodies in a grid, just like the previous section's final code.

After that, we need to know the index of our kernel, so we use Unity's FindKernel method. The rest is just to set the correct data in the

shader. The ComputeBuffer needs to be setup the same way as with the quads, with the stride of the Body object. Another important thing

is to set the buffer as Global. This will make sure that both the rendering and the compute shader access the same data. The last thing is

to calculate the number of groups we need. As explain previously, we need to launch enough groups to index all of our bodies. Since we have

1024 threads per group, the number of group we need is

numberOfBodies / 1024.

After adding the new functions below, we need

to set the ComputeShader in the serialized field from the editor.

using UnityEngine;

public class NBodySimulationGPU : MonoBehaviour

{

[SerializeField] int numberOfBodies = 625;

const float DELTATIME = 0.01f;

const float distanceThreshold = 0.05f;

const int numThreads = 1024;

const int gridSize = 25;

int kernel;

int numThreadGroups;

[SerializeField] ComputeShader compShader;

ComputeBuffer bodiesBuffer;

// Start is called before the first frame update

void Start()

{

SetupRenderShader();

SetupComputeShader();

}

void SetupComputeShader()

{

numberOfBodies = gridSize * gridSize;

Body[] bodies = new Body[numberOfBodies];

int k = 0;

for (int i = 0; i < gridSize; i++)

{

for (int j = 0; j < gridSize; j++)

{

Vector3 position = new Vector3(-gridSize / 2 + i, -gridSize / 2 + j) * 1.5f;

bodies[k] = new Body();

bodies[k].pos = position;

bodies[k].vel = Random.insideUnitSphere + Vector3.Cross(position, Vector3.back).normalized;

bodies[k++].mass = 1e-1f;

}

}

//Instantiate compute shader

kernel = compShader.FindKernel("NBodyMain");

bodiesBuffer = new ComputeBuffer(bodies.Length, 10 * sizeof(float));//Vector3 has 3floats => 3float * 3Vector3 + 1float = 10 float

bodiesBuffer.SetData(bodies);

Shader.SetGlobalBuffer("bodies", bodiesBuffer);//global buffer because rendering shader also uses this buffer

compShader.SetBuffer(kernel, "bodies", bodiesBuffer);

compShader.SetFloat("DELTATIME", DELTATIME);

compShader.SetFloat("distanceThreshold", distanceThreshold);

compShader.SetInt("numBodies", numberOfBodies);

numThreadGroups = Mathf.CeilToInt((float)numberOfBodies / numThreads);

}

// Update is called once per frame

void Update()

{

compShader.Dispatch(kernel, numThreadGroups, 1, 1);

Graphics.DrawProcedural(bodyMat, renderBounds, MeshTopology.Triangles, vertexCount, numberOfBodies);

}

private void OnDestroy()

{

bodiesBuffer?.Release();

quadVerticesBuffer?.Release();

}

}

Here is the result:

As we can see in the video, with the same amount of bodies we had in the first section, our fps came all the way back up to around 600.

Lets see how many bodies we can simulate before we get back to 20 fps.

This is a 240x240 grid, for a total of 57,600 bodies!

There are two things to note. First is that in the first part, the simulation was cpu-bound, so recording the screen with my GPU did not

affect performance. However, this time, since the simulation is GPU-bound, recording makes me lose about 2 fps.

Second is that in the first

part, we used unity's Time.deltaTime for the simulation time step. This made the simulation run at the same rate regardless of the fps,

at the cost of precision. However this time, the time step is fixed. Therefore, the lower the fps, the slower the simulation will run.

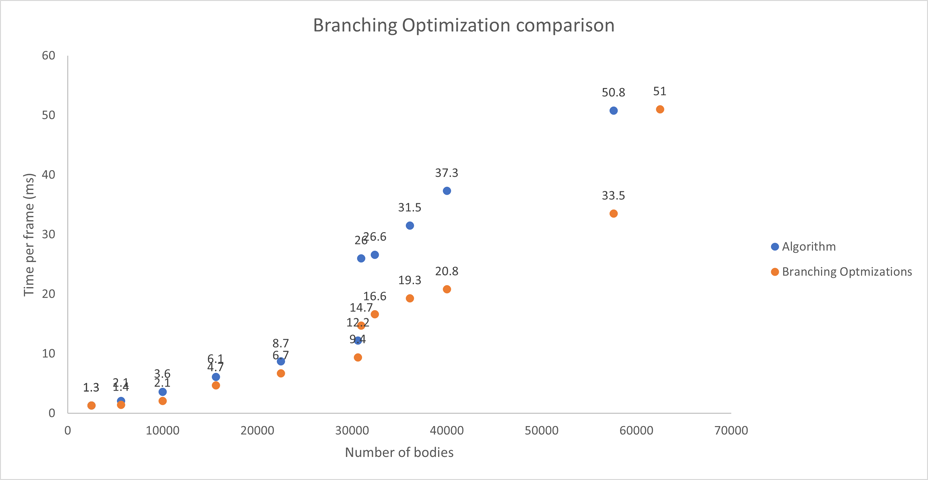

Lets see how this compares with our O(n2) algorithm from earlier.

It is possible to further optimize: Notice that our two "if" statement basically achieve the same thing. However, branching on the GPU can be costly, and

the less "if" statements there are, the better it is. We can remove our first "if", and our "continue" statement, so that we only have

one "if" in our for loop.

[numthreads(1024, 1, 1)]

void NBodyMain(uint3 id : SV_DispatchThreadID)

{

int index = id.x;

float3 totalForce = float3(0, 0, 0);

for (int i; i < numBodies; i++)

{

//calculate magnitude and direction of force

float3 directionVector = bodies[i].pos - bodies[index].pos;

float distance = length(directionVector);

if (distance > distanceThreshold)

totalForce += bodies[i].mass * bodies[index].mass * normalize(directionVector) / (distance * distance);

}

bodies[index].acc = totalForce / bodies[index].mass;

bodies[index].pos += DELTATIME * bodies[index].vel + 0.5f * DELTATIME * DELTATIME * bodies[index].acc;

bodies[index].vel += DELTATIME * bodies[index].acc;

}

By removing these useless branching operations, the simulation of 240x240 went from 53.4ms to 33.5ms.

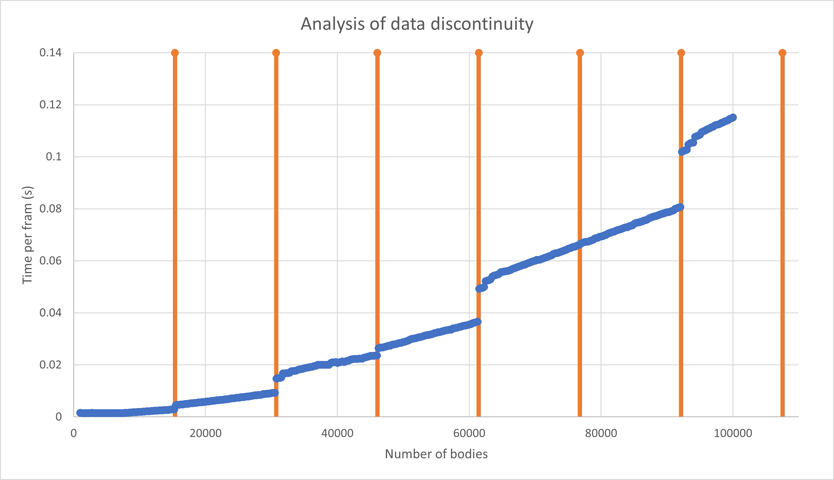

Although this is much better, I do not fully understand the data I have. There is a weird jump between a 175x175 grid and a 176x176 grid

where the time for a frame increases greatly. A that point the number of thread groups goes from 30(30625/1024) to 31(30976/1024). I tried

to look for a reason and found that my GPU has 15 Streaming Multiprocessors. The numbers somewhat line up but as I do not understand GPU architecture,

I do not know if these numbers are linked.

I made a script to run the simulation multiple times and log the data for me. The simulation was ran from 1000

to 100000 bodies, in increments of 250. The time per frame was averaged over 3000 frames. The orange lines in the chart are where the number of thread groups

are a multiple of 15(15,30,45,60,75,90,105).

It seems like the data jumps a little at multiples of 15, and a lot at multiples of 30. I'm not sure of the exact reason, but it does seem to correlate

with the fact that there are 15 Streaming Multiprocessors in my GPU.

Lets try to modify how we generate the bodies for more interesting results:

I made the camera spin around the simulation and adjusted the shader because it was way too bright.

A circle:

A sphere:

A cube with different initial velocities.

Notice that in the cube simulation, the bodies go so far that our quad is not big enough to be seen. We can make them bigger by scaling our vertices

in our setup method.

We can see the structure of the simulation much better this way.

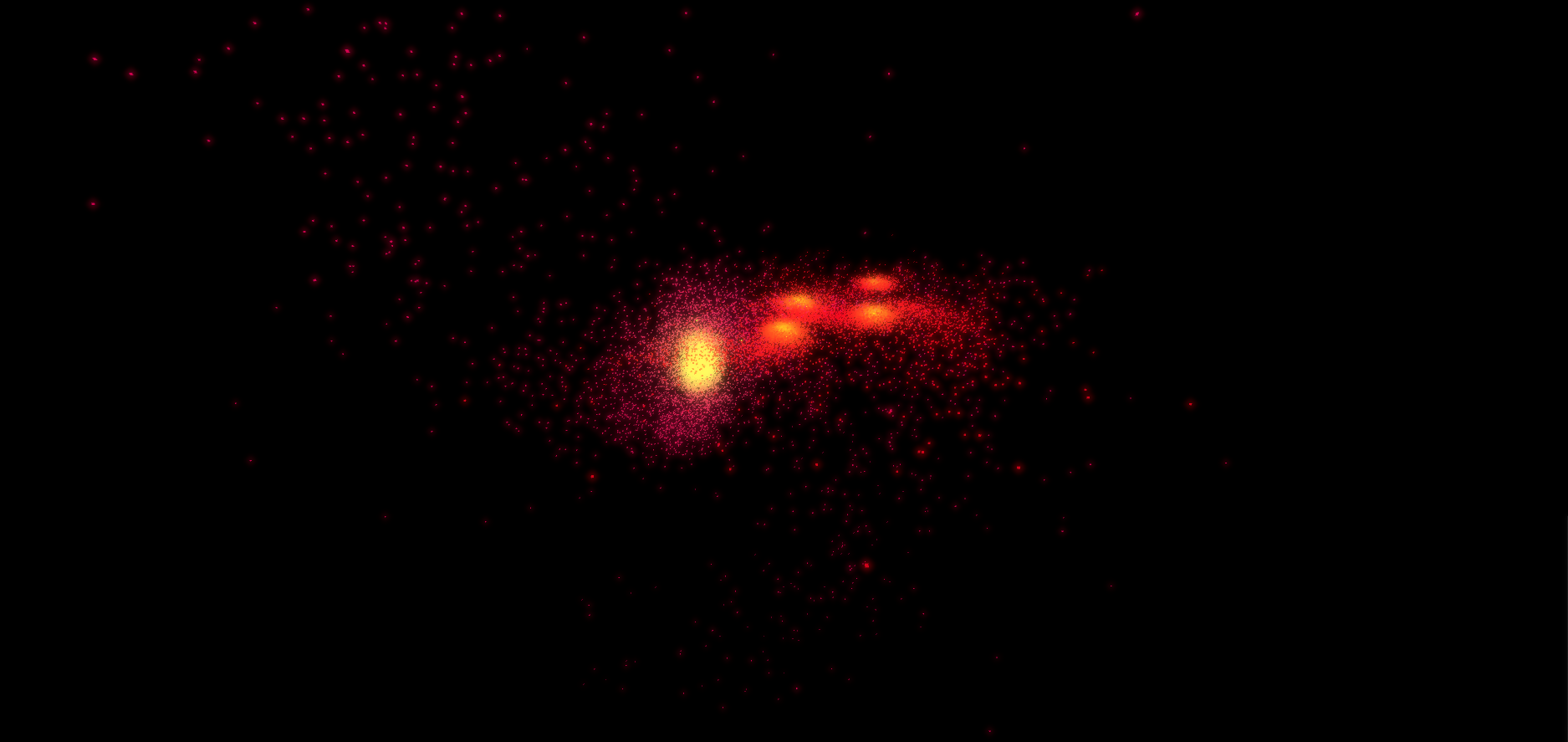

Next, lets try to make two "galaxies" collide:

Here is the final code for the collision of the two "galaxies".

Shader "Unlit/ProceduralBody"

{

Properties

{

_Color("Main Color", Color) = (1,1,1,1)

}

SubShader

{

Tags { "Queue" = "Geometry" "RenderType" = "Opaque"}

Pass

{

CGPROGRAM

#pragma target 5.0

#pragma vertex vert

#pragma fragment frag

#include "UnityCG.cginc"

struct Body

{

float3 pos;//position

float3 vel;//velocity

float3 acc;//acceleration

float mass;

};

struct v2f

{

uint id : SV_INSTANCEID;

float4 vertex : SV_POSITION;

};

float3 faceCamera(float3 position, float3 centerPosition)

{

//get direction to face

float3 norm = normalize(_WorldSpaceCameraPos - centerPosition);

//get axis to rotate on

//axis to rotate on is orthogonal to original facing direction and new direction to face

float3 axis = normalize(cross(norm, float3(0, 0, 1)));

//since both vector are normalized, no need to divide by magnitude

// a . b = |A|*|B|*cos(angle)

float angle = acos(dot(norm, float3(0, 0, -1)));

//rotate position of vertex

//https://en.wikipedia.org/wiki/Rotation_matrix#Rotation_matrix_from_axis_and_angle

float3 rotatedPos = axis * (dot(axis, position)) +

cross(cos(angle) * (cross(axis, position)), axis) +

sin(angle) * (cross(axis, position));

return rotatedPos;

}

float4 _Color;

StructuredBuffer<float3< quadVertices;

StructuredBuffer<Body> bodies;

//vertex_id-> id of vertex. We have six vertex so this will range from 0 to 5.

//instance_id-> id of instance. The number of instances is the number of bodies we have. This will range from 0 to numberOfBodies-1.

v2f vert(uint vertex_id: SV_VertexID, uint instance_id : SV_InstanceID)

{

v2f o;

//get vertex position

float3 position = quadVertices[vertex_id];

//make quad face camera

position = faceCamera(position, bodies[instance_id].pos);

//add body position

position += bodies[instance_id].pos;

//convert the vertex position from world space to clip space

o.vertex = UnityObjectToClipPos(float4(position.xyz, 1));

o.id = instance_id;

return o;

}

fixed4 frag(v2f i) : SV_Target

{

//modify color depending on acceleration and mass

fixed accCol = length(bodies[i.id].acc)*1.2f;

fixed massCol = bodies[i.id].mass*1.25f;

return _Color + fixed4(0, accCol, massCol, 1);

}

ENDCG

}

}

}

// Each #kernel tells which function to compile; you can have many kernels

#pragma kernel NBodyMain

struct Body

{

float3 pos; //position

float3 vel; //velocity

float3 acc; //acceleration

float mass;

};

RWStructuredBuffer<Body> bodies;

float DELTATIME; //time increment between simulation frames

float distanceThreshold;

int numBodies; //length of bodies array

[numthreads(1024, 1, 1)]

void NBodyMain(uint3 id : SV_DispatchThreadID)

{

int index = id.x;

float3 totalForce = float3(0, 0, 0);

for (int i; i < numBodies; i++)

{

//calculate magnitude and direction of force

float3 directionVector = bodies[i].pos - bodies[index].pos;

float distance = length(directionVector);

if (distance > distanceThreshold)

totalForce += bodies[i].mass * bodies[index].mass * normalize(directionVector) / (distance * distance);

}

bodies[index].acc = totalForce / bodies[index].mass;

bodies[index].pos += DELTATIME * bodies[index].vel + 0.5f * DELTATIME * DELTATIME * bodies[index].acc;

bodies[index].vel += DELTATIME * bodies[index].acc;

}

using UnityEngine;

public class NBodySimulationGPU : MonoBehaviour

{

[SerializeField] Material bodyMat;

[SerializeField] int numberOfBodies = 625;

[SerializeField] ComputeShader compShader;

[SerializeField] float quadSize = 1;

readonly Bounds renderBounds = new Bounds(Vector3.zero, Vector3.one * 100000);//for frustrum culling

const float DELTATIME = 0.05f;

const float distanceThreshold = 0.05f;

const int numThreads = 1024;

const int gridSize = 175;

const int vertexCount = 6;

ComputeBuffer quadVerticesBuffer;

ComputeBuffer bodiesBuffer;

int kernel;

int numThreadGroups;

// Start is called before the first frame update

void Start()

{

SetupRenderShader();

SetupComputeShader();

}

void SetupComputeShader()

{

numberOfBodies = gridSize * gridSize;

Body[] bodies = new Body[numberOfBodies];

int firstGalaxyGridSize = 70;

int secondGalaxyGridSize = gridSize - firstGalaxyGridSize;

int k = 0;

//create first galaxy

for (int i = 0; i < firstGalaxyGridSize; i++)

{

for (int j = 0; j < gridSize; j++)

{

Vector3 position = new Vector3(-gridSize / 2 + i, -gridSize / 2 + j) * 1.5f;

bodies[k] = new Body();

bodies[k].pos = position + Vector3.left * 500;

bodies[k].vel = Random.insideUnitSphere + Vector3.Cross(position, Vector3.back).normalized * 3 + Vector3.right * 2f + Vector3.up*1.5f;

bodies[k++].mass = 3e-1f;

}

}

//create second galaxy

for (int i = 0; i < secondGalaxyGridSize; i++)

{

for (int j = 0; j < gridSize; j++)

{

Vector3 position = Quaternion.Euler(90,0,0) * Random.insideUnitCircle * gridSize* 0.5f * 1.5f;

bodies[k] = new Body();

bodies[k].pos = position+ Vector3.right * 500;

bodies[k].vel = Random.insideUnitSphere + Vector3.Cross(position, Vector3.up).normalized * 3 + Vector3.left * 2f;

bodies[k++].mass = 1e-1f;

}

}

//Instantiate compute shader

kernel = compShader.FindKernel("NBodyMain");

bodiesBuffer = new ComputeBuffer(bodies.Length, 10 * sizeof(float));//Vector3 has 3floats => 3float * 3Vector3 + 1float = 10 float

bodiesBuffer.SetData(bodies);

Shader.SetGlobalBuffer("bodies", bodiesBuffer);//global buffer because rendering shader also uses this buffer

compShader.SetBuffer(kernel, "bodies", bodiesBuffer);

compShader.SetFloat("DELTATIME", DELTATIME);

compShader.SetFloat("distanceThreshold", distanceThreshold);

compShader.SetInt("numBodies", numberOfBodies);

numThreadGroups = Mathf.CeilToInt((float)numberOfBodies / numThreads);

}

void SetupRenderShader()

{

//Create our mesh vertices

Vector3[] quadVertices = new Vector3[]

{

new Vector3(-0.5f, 0.5f)*quadSize,

new Vector3(0.5f, 0.5f)*quadSize,

new Vector3(0.5f, -0.5f)*quadSize,

new Vector3(0.5f, -0.5f)*quadSize,

new Vector3(-0.5f, -0.5f)*quadSize,

new Vector3(-0.5f, 0.5f)*quadSize

};

//set our vertices in the vram (GPU memory)

quadVerticesBuffer = new ComputeBuffer(quadVertices.Length, 3 * sizeof(float));

quadVerticesBuffer.SetData(quadVertices);

bodyMat.SetBuffer("quadVertices", quadVerticesBuffer);

}

// Update is called once per frame

void Update()

{

compShader.Dispatch(kernel, numThreadGroups, 1, 1);

Graphics.DrawProcedural(bodyMat, renderBounds, MeshTopology.Triangles, vertexCount, numberOfBodies);

}

//Release GPU memory when stopping the simulation

private void OnDestroy()

{

bodiesBuffer?.Release();

quadVerticesBuffer?.Release();

}

}

Hopefully this demonstration was able to show the performance advantages of GPGPU for parallel algorithms.

Although the performance improvement was massive, it would also be interesting to look into the

Barnes-Hut algorithm, which optimizes the computation

of the N-Body problem. It runs in O(nlog(n)) instead of O(n2), and it is also possible to run it in

parallel on the gpu.

Also, to get a nice visual, adding a "bloom effect" by blending a blurred version of the image makes the quads look like they emit light. :)

FM